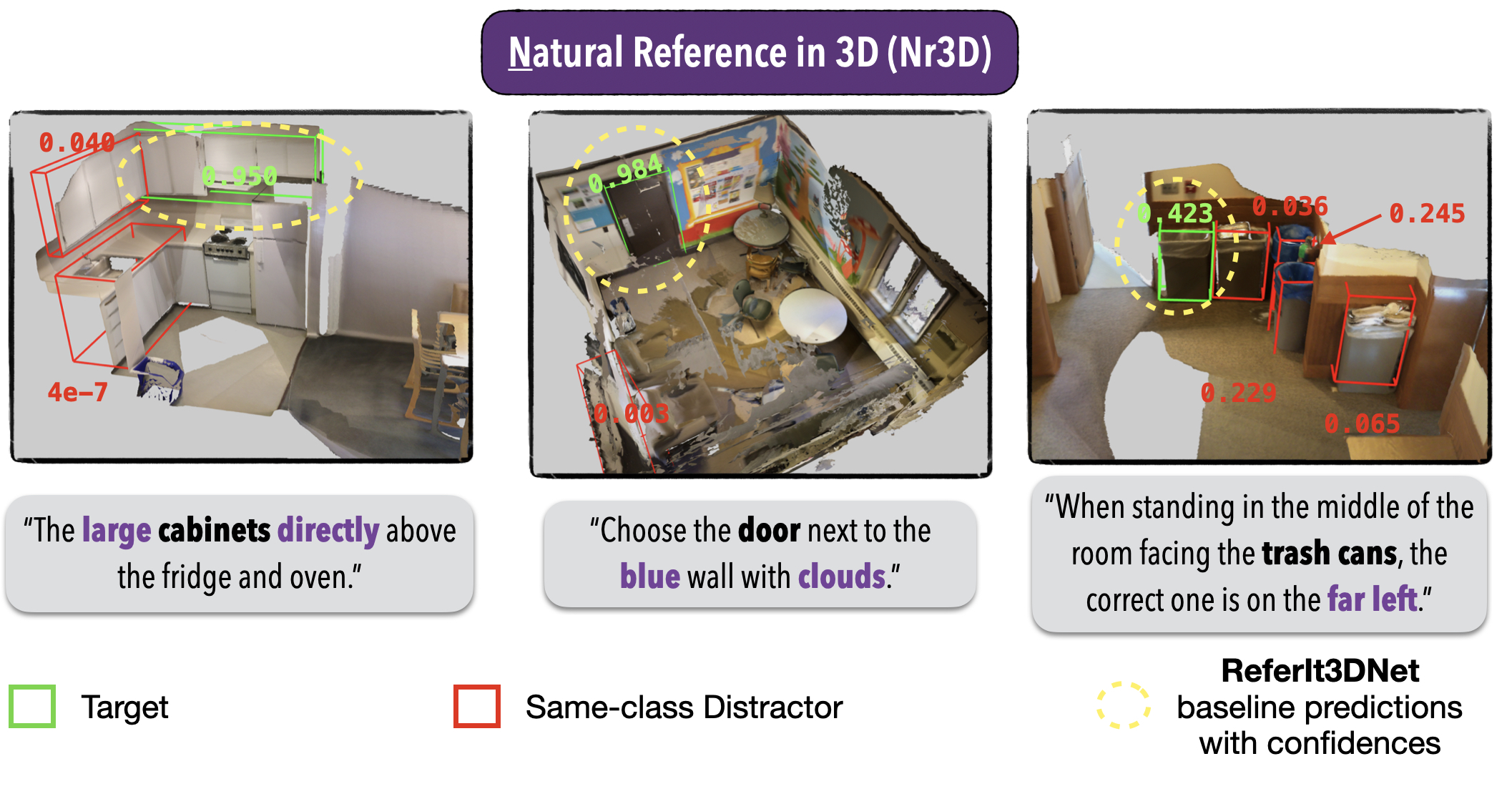

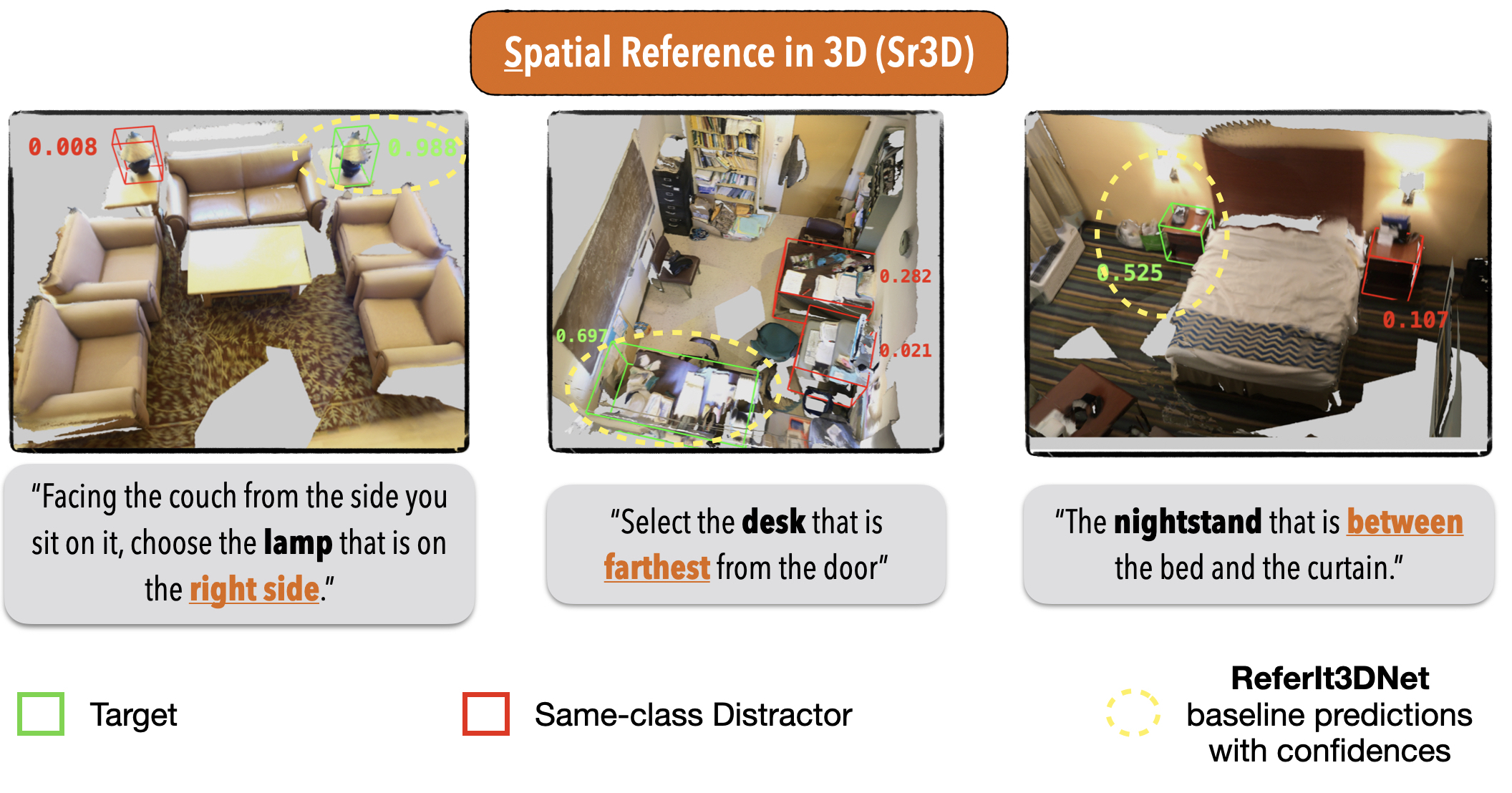

ReferIt3D Benchmarks

Intro

With the ReferIt3D benchmarks, we wish to track and report the ongoing progress in the emerging field of language-assisted understanding and learning in real-world 3D environments. To this end, we investigate the same questions present in the ReferIt3D paper and compare methods that try to identify a single 3D object among many of a real-world scene, given appropriate referential language.Specifically we consider:

- How well such learning methods work when the input language is Natural as produced by speaking humans referring to the object (Nr3D challenge) vs. being template-based concerning only Spatial relations among the objects of a scene (Sr3D challenge)?

- How such methods are affected when we vary the number of same-to-the-target-class distracting instances in the 3D scene? E.g., when handling an "Easy" case, where the system has to find the target among two armchairs vs. a "Hard" case, where it has to find it among at least three?

- Last, how such methods perform when the input language is View-Dependent e.g., "Facing the couch, pick the ... on your right side", vs. being View-Independent e.g., "It's the ... between the bed and the window".

Rules

Please use our published datasets following the official ScanNet train/val splits. Since in these benchmarks we tackle the identification problem among all objects in a scene (and not only among the same-class distractors), when using the Nr3D make sure to use only the utterances where the target-class is explicitly mentioned (mentions_target_class=True) and which where guessed correctly by the human listener (correct_guess=True).To download the pre-processed datasets that reflect exactly the same input we gave to our proposed network (where the filters mentioned above are pre-applied), use the following links:

Otherwise, If you want to download the raw datasets instead, please use the following links: (Nr3D, Sr3D).

Note: The official code of Referit3D paper for training/testing takes as input the raw datasets because it applies the filters mentioned above on the fly.

Nr3D Challenge

| Paper | Overall | Easy | Hard | View-Dependent | View-Independent |

|---|---|---|---|---|---|

| ReferIt3D | 35.6% | 43.6% | 27.9% | 32.5% | 37.1% |

| FFL-3DOG | 41.7% | 48.2% | 35.0% | 37.1% | 44.7% |

| Text-Guided-GNNs | 37.3% | 44.2% | 30.6% | 35.8% | 38.0% |

| InstanceRefer | 38.8% | 46.0% | 31.8% | 34.5% | 41.9% |

| TransRefer3D | 42.1% | 48.5% | 36.0% | 36.5% | 44.9% |

| LanguageRefer | 43.9% | 51.0% | 36.6% | 41.7% | 45.0% |

| LAR | 48.9% | 56.1% | 41.8% | 46.7% | 50.2% |

| SAT | 49.2% | 56.3% | 42.4% | 46.9% | 50.4% |

| 3DVG-Transformer | 40.8% | 48.5% | 34.8% | 34.8% | 43.7% |

| 3DRefTransformer | 39.0% | 46.4% | 32.0% | 34.7% | 41.2% |

| BUTD-DETR | 54.6% | 60.7% | 48.4% | 46.0% | 58.0% |

| MVT | 55.1% | 61.3% | 49.1% | 54.3% | 55.4% |

| ScanEnts3D | 59.3% | 65.4% | 53.5% | 57.3% | 60.4% |

| 3D-VisTA | 64.2% | 72.1% | 56.7% | 61.5% | 65.1% |

| ViL3DRel | 64.4% | 70.2% | 57.4% | 62.0% | 64.5% |

| CoT3DRef | 64.4% | 70.0% | 59.2% | 61.9% | 65.7% |

| Vigor | 59.7% | 66.6% | 53.1% | 59.2% | 59.9% |

| MiKASA | 64.4% | 69.7% | 59.4% | 65.4% | 64.0% |

| UniVLG | 65.2% | 73.3% | 57.0% | 55.1% | 69.9% |

Sr3D Challenge

| Paper | Overall | Easy | Hard | View-Dependent | View-Independent |

|---|---|---|---|---|---|

| ReferIt3D | 40.8% | 44.7% | 31.5% | 39.2% | 40.8% |

| Text-Guided-GNNs | 45.0% | 48.5% | 36.9% | 45.8% | 45.0% |

| InstanceRefer | 48.0% | 51.1% | 40.5% | 45.4% | 48.1% |

| TransRefer3D | 57.4% | 60.5% | 50.2% | 49.9% | 57.7% |

| LanguageRefer | 56.0% | 58.9% | 49.3% | 49.2% | 56.3% |

| SAT | 57.9% | 61.2% | 50.0% | 49.2% | 58.3% |

| LAR | 59.4% | 63.0% | 51.2% | 50.0% | 59.1% |

| 3DVG-Transformer | 51.4% | 54.2% | 44.9% | 44.6% | 51.7% |

| 3DRefTransformer | 47.0% | 50.7% | 38.3% | 44.3% | 47.1% |

| BUTD-DETR | 67.0% | 68.6% | 63.2% | 53.0% | 67.6% |

| MVT | 64.5% | 66.9% | 58.8% | 58.4% | 64.7% |

| NS3D | 62.7% | 64.0% | 59.6% | 62.0% | 62.7% |

| 3D-VisTA | 72.8% | 74.9% | 67.9% | 63.8% | 73.2% |

| ViL3DRel | 72.8% | 74.9% | 67.9% | 63.8% | 73.2% |

| CoT3DRef | 73.2% | 75.2% | 67.9% | 67.6% | 73.5% |

| MiKASA | 75.2% | 78.6% | 67.3% | 70.4% | 75.4% |

| UniVLG | 81.7% | 84.4% | 75.2% | 66.2% | 82.4% |